Hell hath no fury for a marketer like a manual penalty from Google. As an SEO expert, I often find myself cleaning up issues for clients who find themselves at the mercy of penalties. Often, these penalties stem from actions taken by black hat SEO experts who promise the world, yet deliver little in the way of real organic growth.

Google penalties are notoriously difficult to overcome. Depending on the nature of the penalty, it can take weeks, months, or even years to fully bounce back. This is one case where an ounce of prevention is honestly worth a pound of cure.

I believe that knowledge is power, especially when it comes to navigating Google’s complex SEO rules. To help you better understand penalties, I’m going to break down a few of the most common. We’ll end with an overview of one penalty webmasters overlook far too often.

Hacked or Exploited Websites

Web technologies (like HTML, CSS, ASP, etc.) have evolved considerably and are more secure than they once were. But vulnerabilities like broken authentication, session hijacking, SQL injection, and guessed passwords/logins all still exist, as do cross-site scripting (XSS) and remote scripting.

Google can (and often does) sandbox hacked websites for containing exploits, phishing attempts, or malware. Your site will still show in results for a time, but when visitors attempt to follow the result, they see a red box with a warning instead of visiting your site. This can be devastating to a website with a significant following.

The best way to prevent a penalty from unauthorized access is to monitor your site regularly for symptoms. Deal with exploits lightning-fast, even if it means taking your site offline for a time. Most importantly, be proactive by practicing good webmaster security strategies at all times.

Keyword Overuse

Keywords: depending on who you ask, they’re either the worst or the best thing to happen to the SEO industry.

The problem?

Too many webmasters and “SEO experts” use keywords incorrectly or inefficiently. They stuff them into hastily written text at densities as high as 10 or 12 percent, thinking that more is better – and then find themselves swiftly sandboxed by Google for SERP manipulation.

Older-generation SEO experts actually valued using high percentages of keywords; it was an effective way to rank at the time. Google’s Penguin update did away with it, devaluing the use of keywords in comparison with other more organic strategies that fostered valuable, well-matched content.

How much is too much? There’s no magic number, but generally, if you have to think about it, you’re probably trying too hard to fit them in. Structure your content around categories and specific niche topics, not keywords; worry less about hitting a percentage and more about crafting high-quality content with great use value.
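If you want to see where an existing page stands, a rough density check is easy to script. The sketch below is only an illustration: the function name `keyword_density` is hypothetical, and it assumes density means the share of all words on the page that belong to occurrences of the keyword phrase. Other tools may define it differently, and Google publishes no threshold.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Return the percentage of words in `text` that belong to occurrences of `keyword`.

    Assumption: density = (occurrences * words in keyword) / total words * 100.
    """
    # Lowercase and split into simple word tokens so matching ignores case and punctuation.
    words = re.findall(r"[a-z0-9']+", text.lower())
    key_words = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not key_words:
        return 0.0

    n = len(key_words)
    # Count every position where the keyword phrase appears as a run of consecutive words.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == key_words)
    return 100.0 * hits * n / len(words)


if __name__ == "__main__":
    sample = "Cheap shoes for sale. Buy cheap shoes today, cheap shoes!"
    print(f"{keyword_density(sample, 'cheap shoes'):.1f}%")
```

Treat the number as a smell test, not a target. If a page lands anywhere near the 10 to 12 percent range mentioned above, it almost certainly reads as stuffed.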

Markup Manipulation or Spam

Markup manipulation is a serious issue, but it can also be seriously confusing for webmasters. We hear stories about well-intentioned webmasters logging in one day and suddenly seeing this message on their Search Console dashboard:

Markup on some pages on this site appears to use techniques such as marking up content that is invisible to users, marking up irrelevant or misleading content, and/or other manipulative behavior that violates Google’s Rich Snippet Quality guidelines

Whew. That’s a mouthful! It’s not hard to see why some webmasters get confused about what this message actually means. Let’s break it down so we can better understand it.

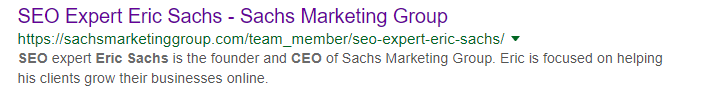

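First, let’s talk Rich Text Snippets (RTS), which Google simply calls rich snippets. RTS isn’t an inherently negative strategy; in fact, Google created it to help webmasters showcase their content on search results more clearly. An RTS is a search result that Google enhances with extra details, such as ratings, prices, or dates, drawn from markup on the page; it appears alongside the usual title, link, and short, ~160-character description.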

Webmasters have the ability to define a specific RTS using markup, or they can let Google parse something automatically from the page. Generally, it’s better to define your RTS because it gives you more control.

Take a look at our RTS for Sachs:

Short, sweet, and to the point – exactly how it should be.

Where RTS becomes a problem is when webmasters use markup to define an RTS that’s either deceptive or somehow intended to manipulate searcher behavior. Misleading visitors with markup (for example, putting “click here for free money!!” in your RTS, even though it leads to a product page) is a clear example of a violation.

If you plan to use RTS, be sure they’re on-topic and don’t mislead visitors. Be exceptionally careful with website plugins like Yoast that may base RTS selection on imperfect algorithms; these plugins can sometimes choose the wrong RTS, and should always be manually reviewed before publishing.
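A quick automated pre-check can support that manual review, though it can’t replace it. The sketch below is a minimal example under a few assumptions: the description is defined with a standard `<meta name="description">` tag, the ~160-character figure above is used as the length limit, and the names `MetaDescriptionParser` and `review_description` are hypothetical. It only flags missing, empty, or overlong descriptions; judging whether the text is on-topic and honest is still a human job.

```python
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collects the content of the first <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        # Only the first description tag is recorded.
        if tag != "meta" or self.description is not None:
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() == "description":
            self.description = attr_map.get("content") or ""


def review_description(html: str, max_length: int = 160) -> list[str]:
    """Return a list of problems found with the page's description (empty if none)."""
    parser = MetaDescriptionParser()
    parser.feed(html)

    problems = []
    if parser.description is None:
        problems.append("no description defined")
    elif not parser.description.strip():
        problems.append("description is empty")
    elif len(parser.description) > max_length:
        problems.append(
            f"description is {len(parser.description)} characters (limit {max_length})"
        )
    return problems


if __name__ == "__main__":
    page = '<html><head><meta name="description" content="Short and sweet."></head></html>'
    print(review_description(page) or "looks fine, now read it yourself")
```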

Spammy or Purchased Backlinks

Google sees backlinks as proof people find your content useful enough to link to; theoretically, they expect these backlinks to be genuine and not bought, borrowed, begged, or stolen. But as we all know, the Internet is a bit like the Wild West, and if there’s a way to exploit something, someone will find a way to make it happen.

Enter the era of guest blogging, backlink networks, and for-pay backlinks. Entire websites cropped up during the mid ‘00s; their sole intention was to link to websites for pay or some other benefit. These large-scale blog networks and link farms often appeared legitimate, at least at first glance, but upon scrutiny, often contained thin, duplicate, or spun content. Their sole purpose was to manipulate SERPs by inflating backlinks for customers.

Over time, it became very obvious that webmasters were abusing this tactic. Some purchased thousands of links, temporarily boosting their PageRank (when PageRank still existed in numerical format).

But as with any other strategy that seems a little too easy, the search engine giant caught on. Google Penguin successfully caught and sandboxed more websites for this transgression than ever before, changing the entire industry in the process.

So, what’s the best way to prevent a manual penalty for spammy backlinks? Don’t buy, borrow, beg, steal, or ask for backlinks, full stop. Backlinks aren’t all bad, but they should never be something you’re paying for – they should be earned if you want to see real, organic results. Create useful content with viral attributes that people want to link to because it’s so useful, and the rest will happen naturally.

Most importantly, if you bought spammy backlinks in the past, disavow the links now using Google’s disavow links tool.
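Disavowing means uploading a plain text file that lists what you want Google to ignore: one entry per line, with whole domains prefixed by `domain:`, individual pages given as full URLs, and comment lines starting with `#`. Here is a minimal sketch of how you might generate that file from a list you’ve already compiled. The function name `build_disavow_file` and the example domains are hypothetical, so check the output against Google’s current instructions before uploading.

```python
def build_disavow_file(domains, urls, note=""):
    """Build the text of a disavow file from domains and individual URLs.

    Assumed format: optional '#' comment, 'domain:' lines for whole domains,
    then one full URL per line for individual pages.
    """
    lines = []
    if note:
        lines.append(f"# {note}")

    # Normalise and de-duplicate domains before writing them out.
    clean_domains = sorted({d.strip().lower() for d in domains if d.strip()})
    lines.extend(f"domain:{d}" for d in clean_domains)

    # Individual URLs are kept as given, apart from surrounding whitespace.
    clean_urls = sorted({u.strip() for u in urls if u.strip()})
    lines.extend(clean_urls)

    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        domains=["spam-links.example"],          # hypothetical link farm
        urls=["http://blog.example/bad-page.html"],  # hypothetical single page
        note="Paid links bought in the past",
    )
    print(text, end="")
```

Listing a whole domain is the blunter instrument; use single URLs when only a page or two on an otherwise decent site is the problem.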

Over-Reliance on Reciprocal Links

This is a segue from the previous section, but it bears further explanation. Reciprocal linking (trading link-for-link with other websites) might seem innocent, but Google considers this deceptive SERP manipulation, too. Technically, it’s a form of spammy or manipulative backlinks, even if it’s just a one-off with a friend.

Anytime you make an agreement with someone to trade links, Google sees it as technically trying to manipulate SERPs. If you do it too often, you will eventually find yourself penalized or sandboxed.

Does that mean strategies like guest blogging or trading the occasional link with a partner are totally out? Probably not. A one-off or the occasional guest blog on high-authority sites isn’t usually a problem.

The same standard rule applies: make sure you’re creating content of value and trading links with sites that actually make sense because they’re helpful, not just because they’ll boost your rank. Linking a plumbing service website to your healthcare business won’t help you and might even harm you instead.

Too Many 404s

Websites change over time – that’s just a fact of life. Whether you update the content, refresh it, remove it, or just change your navigation, some links may still lead visitors to the older removed pages. When they attempt to access that removed content, the server returns an HTTP 404 Not Found Error, advising them that it could not locate the requested information.

404 errors aren’t necessarily negative; they’re a normal, temporary side effect of changing your website or altering pages and content. But too many 404s can confuse web crawlers, making them think your website is full of old, outdated content. Worse yet, if there are enough 404s, it can also look like you have no real content at all.

Exactly how much of an issue is this? You can’t avoid all 404s, really – nor should you try to deceptively avoid them. A few one-off instances here and there won’t hamper your SEO efforts, but 10+ 404s at the same time might. In rare circumstances, this could lead to a manual penalty from Google because your site is too difficult or cumbersome to crawl.

Controversially, not everyone in the SEO industry agrees that having excessive 404 error pages influences SEO in any meaningful way. Some still believe having a 404 is better than leaving old content in place, where it may harm your campaign. Either way, there’s one fact we can all agree on: 404s increase bounce rates because visitors are more likely to leave your site in search of the content they want. A high bounce rate won’t result in a manual penalty, but it will affect your ranking negatively in other ways. Correct them as quickly and as often as you can, using these best practices.
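To correct 404s, you first have to find them. The sketch below shows one simple way to do that, assuming you already have a list of your own URLs (from a sitemap, for instance). The function names `fetch_status` and `find_404s` are hypothetical, and the example URLs are placeholders. It sends a HEAD request to each URL using Python’s standard library and reports the ones that come back as 404.

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for `url` using a HEAD request.

    Network failures (DNS errors, timeouts) are not handled here and will raise.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises for 4xx/5xx responses; the status code is on the exception.
        return error.code


def find_404s(urls, get_status=fetch_status):
    """Return the URLs whose status code is 404, in the order given."""
    return [url for url in urls if get_status(url) == 404]


if __name__ == "__main__":
    # Placeholder URLs; swap in pages from your own site.
    pages = ["https://example.com/", "https://example.com/old-page"]
    for broken in find_404s(pages):
        print("404:", broken)
```

Passing the status lookup in as `get_status` keeps the check easy to try without touching the network, and lets you swap in a different HTTP client later. Some servers answer HEAD requests differently from GET, so spot-check anything surprising in a browser.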